A Summary Profile of Pathogen Detection Technologies

The elimination of disease-causing microbes from the food supply is a primary, compelling and shared objective of all responsible food processors. An incident of morbidity or mortality associated with a foodborne pathogen can be disastrous for the consumer, for the product and brand linked to the outbreak, and for the future commercial viability of the processor’s business.

Regulatory source tracking has become sophisticated and effective enough to almost always identify the item and the producer responsible for a public health issue. The estimated costs of a product recall, the subsequent processing correction and the extended impact on brand value now reach into the tens of millions of dollars, and these estimates do not include the cost of any litigation that may arise from the incident. Many companies never recover financially from a foodborne illness outbreak associated with their product.

Historically, pathogen reduction efforts focused on the pre-release screening of finished product. Lots that produced negative test results moved forward into distribution, while those with multiple positive test results were either re-processed or discarded. While this post-process focus reduced the release of contaminated finished product, it did little to prevent processing failure or improve production efficiency.

Today, more effective food safety efforts strive to eliminate pathogens by focusing on the entire processing continuum—from pre-process raw-material screening to Hazard Analysis and Critical Control Point (HACCP)-driven process control and, finally, to limited finished-product screening. If pathogens can be prevented from entering the production process through raw materials, and critical microbial controls are engineered into the process while their effectiveness is regularly monitored, then the finished product will likely be within design specifications. The current food safety focus is on prevention and process control, not post-process failure.

The successful implementation of HACCP plans throughout the food industry, enabling effective process control, has significantly reduced the need for extensive finished product screening and costly release holds. Measurable reduction in the incidence of several foodborne pathogens (e.g., Listeria monocytogenes) has resulted from this shift in focus, as documented by the Centers for Disease Control and Prevention.

Pathogen Testing

A key component in the pathogen elimination process is the pathogen test method. It is a tool applied across the entire production continuum, from the screening of pre-process raw materials, to the monitoring and verification of critical control point (CCP) effectiveness, to the release of a safe finished product. It is used to verify sanitation effectiveness throughout the production environment, including the all-important food-contact surfaces, and to monitor the status of in-process material. It provides information that is critical to understanding process control and the success or failure of food safety efforts.

The technologies applied to current pathogen test methods are varied and reflect the evolution of microbial diagnostic science over the past century. Options range from conventional cell culture standards to more recent innovations in immunological, molecular and spectrometric applications. The intent of this article is not to focus upon specific manufacturers’ offerings but to profile the basic technologies used in today’s commercial platforms. An understanding of the available technologies and their comparative benefits, relative to the reader’s needs, may assist in the process of selecting the appropriate test method for the reader’s food safety and pathogen elimination efforts.

The evolution of rapid pathogen-test methods over the past quarter-century has added diagnostic value to food safety and process control efforts, providing more timely access to microbial data and indications of emerging product and process issues. Improved pathogen detection capability has enabled Quality and Operations Management personnel to identify, respond to and correct problems proactively, working to prevent finished product failure. New methods have created more options for processors and have necessitated the development of performance criteria to aid in the method selection process: that is, on what basis does a processor compare a variety of test methods and make an appropriate, knowledge-based selection?

Three such criteria have emerged uniformly across the food industry: speed, accuracy and ease-of-use. The first, speed, is compared as “total time to actionable result” (TTAR), the time that elapses between sample availability and a test result. Accuracy, in brief, can be defined as a new method’s agreement with official test methods or gold standards. These comparisons are generally made on the basis of sensitivity and specificity, inclusivity and exclusivity, limit of detection (LOD), false-positive and false-negative rates, and other parameters. Finally, ease-of-use relates to the level of technical expertise required to conduct the test method and the extent of materials required (e.g., instruments and consumables). In profiling the four detection technologies in use or in development today (cellular, immunological, molecular and spectrometric), this article also compares them on the selection criteria cited above.
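Several of the accuracy parameters named above reduce to simple ratios once a candidate method and a reference culture method have been run on the same set of samples. The sketch below assumes a simple 2×2 tally of paired results and computes sensitivity and specificity relative to the reference method; the class and field names are illustrative and are not taken from any AOAC protocol, and formal validation studies define these terms in more detail.

```python
from dataclasses import dataclass


@dataclass
class PairedResults:
    """Counts from testing the same samples by a candidate and a reference method (illustrative)."""
    true_pos: int   # positive by both methods
    false_pos: int  # positive by candidate, negative by reference
    false_neg: int  # negative by candidate, positive by reference
    true_neg: int   # negative by both methods

    def sensitivity(self) -> float:
        # Fraction of reference-positive samples that the candidate also calls positive.
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        # Fraction of reference-negative samples that the candidate also calls negative.
        return self.true_neg / (self.true_neg + self.false_pos)

    def false_positive_rate(self) -> float:
        # Share of reference-negative samples wrongly called positive.
        return 1.0 - self.specificity()

    def false_negative_rate(self) -> float:
        # Share of reference-positive samples the candidate missed.
        return 1.0 - self.sensitivity()
```

For instance, with hypothetical counts of 48 agreeing positives, 2 false negatives, 3 false positives and 47 agreeing negatives, sensitivity is 48/50 = 0.96 and specificity is 47/50 = 0.94.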

Which Test to Choose?

Cell culture detection and recovery methods for pathogens date to the earliest days of microbiology and have grown in complexity, selectivity and effectiveness since the late 19th century. Because their ideal endpoint is a visible bacterial colony, cell culture methods have long been considered the gold standard of microbiology.

In brief, the method involves suspending a sample in solution; preparing an optimal bacterial growth medium with appropriate nutrients, pH level and metabolic indicators; mixing or plating the sample and medium in a petri dish, tube or similar vessel; incubating the mixture at an ideal bacterial growth temperature for a prescribed period of time; and finally, assessing the result for bacterial colonies, either qualitatively (presence or absence of the target) or quantitatively (number of countable colonies). Adjustments to any of these method components (nutrients, pH level, indicators, incubation time or temperature) may be made to alter the selectivity and outcome of the assay. Fastidious bacterial targets, such as Listeria spp., may require multiple enrichments and platings on a variety of selective media to suppress competitors and enable recovery of the organism.
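When colonies are counted rather than scored as present or absent, the count is usually converted back to a concentration in the original sample by dividing by the volume plated and the dilution. The sketch below assumes a decimal dilution series and a countable range of 25 to 250 colonies per plate (a range used in some standard plate count procedures; others use 30 to 300); both are parameters of this hypothetical helper rather than fixed rules.

```python
def cfu_per_gram(colonies: int, dilution: float, volume_plated_ml: float,
                 countable_range=(25, 250)) -> float:
    """Estimate colony-forming units (CFU) per gram or mL of the original sample.

    dilution: decimal dilution of the plated suspension, e.g. 1e-3 for 1:1,000.
    volume_plated_ml: volume of that suspension spread or poured on the plate.
    """
    low, high = countable_range
    if not low <= colonies <= high:
        # Plates outside the range are too sparse or too crowded to count reliably.
        raise ValueError(f"{colonies} colonies is outside the countable range {countable_range}")
    # Colonies per mL of plated suspension, scaled back up by the dilution factor.
    return colonies / (volume_plated_ml * dilution)
```

For example, 150 colonies on a plate that received 0.1 mL of a 1:1,000 dilution corresponds to about 1.5 × 10⁶ CFU per gram (or mL) of sample.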

From the standpoint of speed, cell culture methods may require from several days to a week or more to generate confirmed, actionable test results. In terms of accuracy, many are considered official, confirmatory and/or gold standard procedures, because a visible colony of the target organism is produced. The ease-of-use of cell culture methods is debatable. A trained lab technician, exercising good laboratory practices (GLPs), is needed for the sample and media preparations, the multiple test manipulations and the interpretation of results. Apart from a scale, glassware, water bath, autoclave and incubator, most of the materials used in cell culture test methods are consumable and disposable. Many media are also commercially available in pre-prepared, ready-to-use formats, as are pre-poured, sample-ready plates. At least two companies now offer a sample-ready film format that is pre-coated with the appropriate desiccated media and simply requires sample inoculation and incubation.

The award-winning work of Peter Perlmann and Eva Engvall on immunoassays at Stockholm University around 1970 enabled the emergence, one decade later, of commercially available enzyme-linked immunosorbent assay (ELISA) technology for rapid foodborne pathogen detection. Based on antigen/antibody-binding affinity, the most common application of ELISA technology is the “sandwich assay.” Antibodies with high specificity to the target pathogen are anchored to a surface, for example, the walls of a microtiter well. The food or environmental sample to be assayed is placed in solution and dispensed into the well. If the target antigen is present, it binds to the affixed antibody and remains after the sample is rinsed away. Antibodies conjugated with an enzyme are then added to the well. If the target antigen is present on the bound antibody, the conjugated antibody binds to that complex, forming an “antibody-antigen-antibody sandwich.” The unbound conjugated antibody is rinsed away and a substrate is added to the well. If the enzyme linked to the second antibody is present, a colorimetric or fluorescent reaction occurs. The intensity of this signal, compared against a defined cutoff value, determines the presence or absence of the target in the original sample: a positive or negative test result.
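Commercial kits define their own cutoffs, but a common laboratory convention sets the positive/negative threshold at the mean negative-control signal plus a multiple (often three) of its standard deviation. The sketch below assumes that rule; the function names and the default k = 3 are illustrative, not any particular manufacturer’s interpretation scheme.

```python
from statistics import mean, stdev
from typing import Sequence


def elisa_cutoff(negative_controls: Sequence[float], k: float = 3.0) -> float:
    """Assumed rule: cutoff = mean negative-control signal + k standard deviations.

    Needs at least two control readings so that a standard deviation exists.
    """
    return mean(negative_controls) + k * stdev(negative_controls)


def call_sample(signal: float, cutoff: float) -> str:
    # A signal above the cutoff is read as positive; anything else as negative.
    return "positive" if signal > cutoff else "negative"
```

With hypothetical negative-control absorbances of 0.08, 0.10 and 0.12, the cutoff is 0.10 + 3 × 0.02 = 0.16, so a well reading 0.90 would be called positive and one reading 0.11 negative.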

The detection limit of the ELISA method is in the neighborhood of 10⁴–10⁶ cells (CFU) per mL. To detect a single target cell, the sample must therefore be enriched before screening to raise the target to a detectable level. Depending on the target organism, enrichment procedures currently run from eight to 48 hours, so the speed of ELISA technology is determined by the target and the duration of the sample enrichment.
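The link between detection limit and enrichment time follows from exponential growth: the number of doublings needed is log₂(target level ÷ starting level), and each doubling takes one generation time. The sketch below is an idealized estimate, assuming constant growth after a fixed lag phase; the starting level, doubling time and lag used in the example are hypothetical values, and injured cells, competitors or selective agents would lengthen real enrichments.

```python
import math


def enrichment_hours(start_cfu_per_ml: float, target_cfu_per_ml: float,
                     doubling_time_h: float, lag_h: float = 0.0) -> float:
    """Idealized time for exponential growth from a starting to a target concentration."""
    if start_cfu_per_ml <= 0 or target_cfu_per_ml <= start_cfu_per_ml:
        raise ValueError("need 0 < start_cfu_per_ml < target_cfu_per_ml")
    # Number of doublings required to climb from the start to the target level.
    doublings = math.log2(target_cfu_per_ml / start_cfu_per_ml)
    # Lag phase first, then one doubling time per generation.
    return lag_h + doublings * doubling_time_h
```

With an assumed starting level of 1 CFU/mL, a 30-minute doubling time and a 2-hour lag phase, reaching 10⁴ CFU/mL works out to roughly 8.6 hours.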

The technology’s accuracy compares favorably with official cell culture methods according to method approvals granted by the Association of Analytical Communities (AOAC INTERNATIONAL). The incidence of false-positive results may be marginally higher due to cross-reactivity with non-target organisms.

As with cell culture methods, ELISA’s ease-of-use is debatable, and a trained laboratory technician is again recommended. A high degree of automation has now been incorporated into the technology, enabling hands-free processing of multiple samples simultaneously. Required materials depend upon the level of automation, ranging from simple, disposable lateral-flow devices to complex instrumentation in the $25,000–50,000 range. The cost per test for consumables, including enrichments and reagents, is in the $4–6 range.
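Instrument price and per-test consumables can be combined into a single figure by spreading the instrument cost over the tests it will run. The sketch below assumes straight-line amortization and ignores service contracts, labor and confirmation testing; the annual volume and service life in the example are hypothetical inputs, not figures from this article.

```python
def cost_per_test(instrument_cost: float, consumables_per_test: float,
                  tests_per_year: int, service_life_years: float) -> float:
    """Consumables plus straight-line amortized instrument cost, per test (simplified)."""
    if tests_per_year <= 0 or service_life_years <= 0:
        raise ValueError("tests_per_year and service_life_years must be positive")
    # Spread the instrument price evenly over every test run during its service life.
    amortized = instrument_cost / (tests_per_year * service_life_years)
    return consumables_per_test + amortized
```

With the upper-range ELISA figures above ($50,000 instrument, $6 consumables) and an assumed 5,000 tests per year over five years, the amortized cost works out to $8 per test.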

In 1983, while a chemist with Cetus Corporation, Nobel Prize laureate Kary Mullis developed the polymerase chain reaction (PCR), ushering in a diagnostic revolution in molecular biology. PCR technology uses a heat-resistant DNA polymerase from Thermus aquaticus (Taq) to exponentially amplify a target DNA sequence defined by primers that bind specifically to the target’s DNA.

In brief, the procedure involves four steps: denaturation, annealing, extension (amplification) and detection. High heat in a thermocycling instrument denatures the sample’s DNA, splitting it into single strands. During a cooling phase, primers complementary to the target’s specific DNA sequence anneal, or bind, to the single strands if the target sequence is present. The Taq polymerase then extends the primers, copying the target sequence; because each thermocycle can double the number of copies, repeated cycling amplifies the target exponentially. The amplified target DNA can be detected by various methods, including agarose gel electrophoresis and melting curve analysis of intercalating dyes.

Single-cell detection depends upon sample enrichment to a target level of about 10⁴ cells (CFU) per mL. Therefore, as with ELISA, the speed of PCR technology is determined by the target and the duration of sample enrichment, which currently ranges from eight to 48 hours. PCR accuracy compares favorably with official cell culture methods and may arguably be better, given the technology’s highly specific DNA basis. A number of PCR-based offerings have received AOAC approval. Again, a trained lab technician is recommended. Significant automation has been incorporated into the commercial offerings, enabling simultaneous analysis of multiple samples. Instrument costs are in the $50,000 range, while combined sample enrichment and reagent cost per test is in the $5–10 range.
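The exponential amplification described above is often summarized as N = N₀(1 + E)ⁿ, where N₀ is the starting number of target copies, E the per-cycle efficiency (1.0 for perfect doubling) and n the number of cycles. The sketch below applies that idealized model; the efficiency values and detection threshold are assumptions for illustration, and real reactions eventually plateau as reagents are consumed.

```python
import math


def copies_after(n_cycles: int, start_copies: float, efficiency: float = 1.0) -> float:
    """Target copies after n cycles; efficiency 1.0 means exact doubling every cycle."""
    return start_copies * (1.0 + efficiency) ** n_cycles


def cycles_to_reach(threshold_copies: float, start_copies: float,
                    efficiency: float = 1.0) -> float:
    """Cycles needed for the product to reach an assumed detectable threshold."""
    # Solve threshold = start * (1 + E)^n for n.
    return math.log(threshold_copies / start_copies) / math.log(1.0 + efficiency)
```

Under perfect doubling, one starting copy becomes 1,024 copies after 10 cycles and roughly a billion after 30; at a lower assumed efficiency such as 0.9, more cycles are needed to reach the same threshold.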

Finally, the recent and emerging rapid pathogen detection landscape includes spectrometric, or laser-based, diagnostic technologies that are either currently available or under development. These are grouped chiefly around flow cytometry and Raman spectroscopy, both of which use lasers and chemistry to generate unique, target-specific spectral signals. Neither technology is new (Nobel Prize laureate C.V. Raman discovered the light-scattering phenomenon now known as the Raman effect in 1928), but their application to “real-time” foodborne pathogen detection and enumeration is innovative.

In flow cytometry, the sample stream is hydrodynamically focused so that bacteria pass in single file through a flow cell, where they are illuminated by a laser. Bacterial size can be inferred from low-angle (forward) light scatter, while high-angle (side) scatter enables discrimination of intracellular granularity. At the flow cell, a light source may also be used to excite nucleic acid stains or probes, or a variety of tags conjugated to proteins with an affinity for specific bacteria. Because these methods may not require sample enrichment, the time to result for spectrometric technology could potentially be reduced to hours or minutes. The accuracy of the offerings under development and their correlation with official cell culture methods have not yet been published. System use would appear to have requirements similar to those of the previously described technologies; that is, a trained lab technician following GLPs. Flow cytometry instrument costs are in the $50,000–75,000 range, while consumable reagents may fall into the $10–20 range per test.
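Each particle that crosses the laser produces a set of readings (forward scatter, side scatter and fluorescence), and target cells are typically counted by “gating,” that is, keeping only events whose readings fall inside chosen windows. The sketch below assumes a simple rectangular gate with a fluorescence floor; the class names and thresholds are hypothetical, and commercial instruments use their own, often more elaborate, gating strategies.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Event:
    fsc: float  # forward (low-angle) scatter, loosely related to particle size
    ssc: float  # side (high-angle) scatter, related to internal granularity
    fl: float   # fluorescence from a nucleic acid stain or target-specific tag


@dataclass
class Gate:
    fsc_range: Tuple[float, float]
    ssc_range: Tuple[float, float]
    fl_min: float  # minimum fluorescence to count an event as target-labeled

    def contains(self, e: Event) -> bool:
        # An event counts only if it falls inside both scatter windows
        # and is bright enough in the fluorescence channel.
        return (self.fsc_range[0] <= e.fsc <= self.fsc_range[1]
                and self.ssc_range[0] <= e.ssc <= self.ssc_range[1]
                and e.fl >= self.fl_min)


def count_in_gate(events: Iterable[Event], gate: Gate) -> int:
    return sum(1 for e in events if gate.contains(e))


def events_per_ml(count: int, volume_analyzed_ml: float) -> float:
    # Convert a gated count into a concentration for the volume that passed the laser.
    return count / volume_analyzed_ml
```

Counting directly in this way, without growth, is what allows the potentially shorter time to result, provided the target is present at a level the instrument can register in the volume analyzed.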

Pathogen testing is a key component and critical success factor in effective food safety and process control programs. Because multiple diagnostic technologies and formats are available, processors must determine beforehand the specific performance criteria that will fulfill their operational needs; the criteria considered here are speed, accuracy and ease-of-use. The cost of product or process failure can be staggering, but it can largely be avoided with sound planning and effective execution.

Read the sidebar "Quantifying Pathogen Testing"

A. Crispin Philpott has spent many years focused on global food safety and quality issues, primarily in sales and marketing leadership roles within a number of leading and emerging food diagnostic businesses, including 3M Company, DuPont Qualicon, Fisher Scientific and others. For two decades, he has been an active participant in the promotion of improved detection technologies for product safety and process control, including innovative microbiological, immunological, molecular and spectroscopic methods of analysis. He can be reached at 610.696.4405 or acphilpott@aol.com.

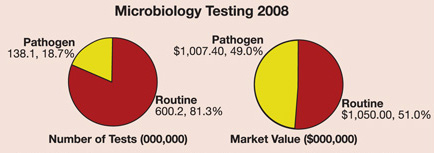

In a recently released market research report entitled Food Micro—2008 to 2013, Strategic Consulting estimates that over 738 million microbiology tests were performed in 2008 by the food processing industry worldwide. Routine microbiology tests [total viable organisms (TVOs), coliform, yeast, etc.] accounted for more than 80% of these tests.

In 2008, food processors around the globe ran approximately 138 million tests (in total) for a variety of pathogens, which had a market value of over $1 billion. Of this total, tests for Salmonella were the most frequent, followed by tests for Listeria, E. coli O157 and Campylobacter.

For further information about this report, please visit www.strategic-consult.com or contact Tom Weschler at 802.457.9933 or weschler@strategic-consult.com.
